We’re building Doltgres, a Postgres-compatible version of our version-controlled SQL database, Dolt. Our goal is to eventually be a drop-in replacement for PostgreSQL (just as Dolt is a drop-in replacement for MySQL). This means that any application that runs on a PostgreSQL backend should run on Doltgres without any code changes. A big, early step toward that goal was getting prepared statements working.

This blog is a deep-dive into how Postgres prepared statements work, and how we emulated full support of them in Doltgres. Let’s jump in.

What are prepared statements?#

In SQL databases, there are two basic ways to construct queries on your client to send to the server.

Let’s say I want to insert some new employees in my database table. I can build a SQL string to send to the server, like this:

query := "INSERT INTO employees (first, last) values ('" + firstName + "', '" + lastName + "');"Or I could use a string formatter, which makes it a little nicer to read:

query := fmt.Sprintf("INSERT INTO employees (first, last) values (%q, %q)", firstName, lastName)Either of these methods is doing the same thing: it’s concatenating string data to build up a complete SQL query with all the values as literals, which then gets passed to the server to be executed, just like you had typed that query into a SQL shell.

This approach is generally not recommended, both because it’s easy to make errors (what if my name

has a ' character in it, like O'Donnel?) and because a malicious user could provide input that

executes totally different statements they shouldn’t be allowed to, a technique called a SQL

injection attack.

So instead, most client libraries encourage you to write prepared statements, which use placeholders in the query string and passes their values to the server in a side channel.

In the Postgres dialect, it looks like this:

stmt, err := db.Prepare("INSERT INTO employees (first, last) VALUES ($1, $2)")

if err != nil {

fmt.Println("Error preparing statement:", err)

return

}

_, err = stmt.Exec(firstName, lastName)Besides preventing SQL injection attacks, prepared statements can also make your queries faster, especially if you are inserting many values in a loop. They let the database server compile the statement once, then execute it many times with different placeholder values, potentially saving a lot of time overall.

Prepared statements under the hood: MySQL v. Postgres#

MySQL and Postgres have very similar syntax for specifying and executing prepared statements at the client layer. But under the hood, prepared statements work very differently in the two engines.

Of the two database products, MySQL has the much simpler architecture. To execute a single prepared statement, a MySQL client sends just two commands to the server:

- COM_STMT_PREPARE, which prepares the statement with its placeholders and returns a statement ID

- COM_STMT_EXECUTE, which executes a given statement ID with a set of bound values.

Meanwhile, a Postgres client has to send 5 or 6 separate messages to execute a single prepared statement:

Parse, which readies a query string for executionDescribe(optional), which returns the result schema of the statementBind, which binds values to any placeholdersDescribe, which returns the result schema of the statement now that variables have been boundExecute, which executes the bound statement and spools result rowsSync, which is a handshake message telling the server we’re done with this batch of messages

Like MySQL, Postgres uses a naming system to connect the queries being prepared to the ones being executed, but unlike MySQL, the client decides on the names (rather than the server choosing an ID).

Why this aside to discuss the differences between MySQL and Postgres? Because the architecture we chose to implement Doltgres requires adapting one to the other.

DoltgreSQL’s architecture#

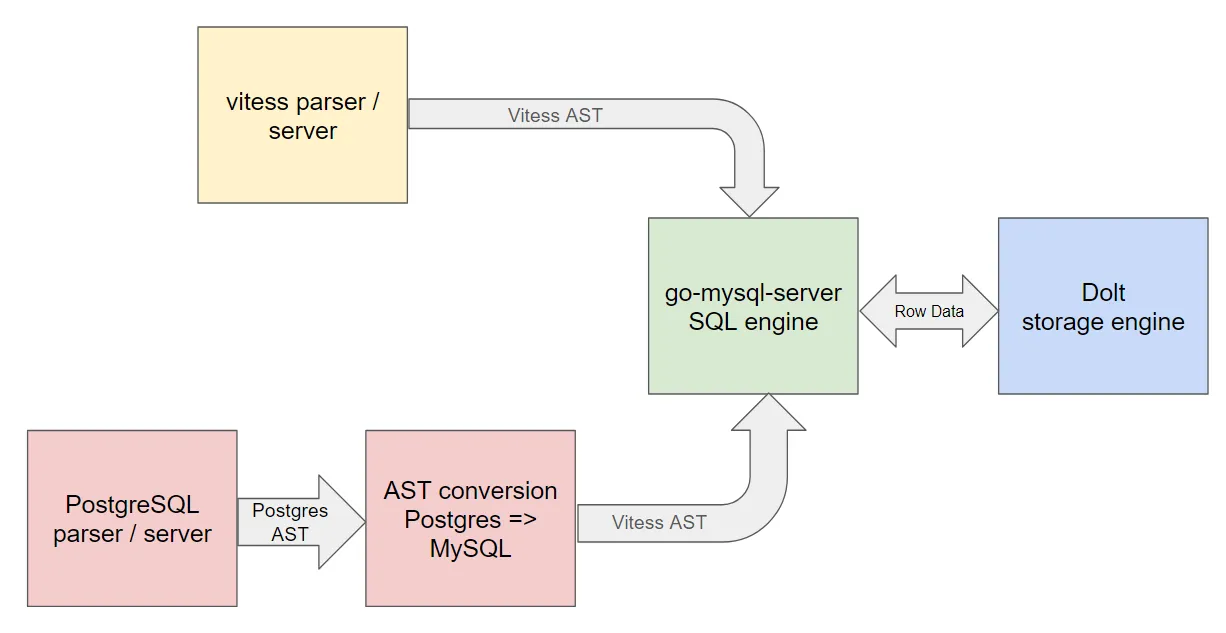

DoltgreSQL is built on top of Dolt, which uses go-mysql-server as its query engine. Effectively, we are emulating Postgres on top of a custom engine we built for MySQL. This diagram explains the flow of query execution in Dolt (top flow) and DoltgreSQL (bottom flow):

To implement DoltgreSQL, we implemented a translation layer that turns PostgreSQL queries into a form that can be executed by the existing engine. This involves two major parts:

- Decode Postgres wire messages in a server and respond to clients appropriately with encoded PostgreSQL messages

- Translate the AST produced by the the Postgres parser to the one produced by the MySQL parser.

When we went to apply this same logic to prepared statements, we found that there were some large gaps.

- Postgres expects to have the types of a result schema available after

Bindhas been called, before any execution takes place. Some clients want to know this information beforeBindhas been called as well. Because MySQL binds and executes in a single interaction, you don’t need to determine the final result schema until that time, so your entire query planning phase (where you learn the result schema) can happen later in the process. - The names of prepared statements being readied for execution are chosen by the client, not the server, and have their own particular semantics for being re-used and overwritten at different points of the lifecycle. Luckily the Postgres documentation for prepared statement message flow is very detailed.

- In MySQL, the types of all bind variables are inferred by the server using MySQL’s famously loose rules for type conversions. But in Postgres, not only must client libraries specify the type of bind variables, they must be determined ahead of time in some cases and must be communicated back to the client for correct execution behavior.

All of these differences made implementing the correct semantics on top of the existing execution engine challenging to get correct. Remember: we’re emulating Postgres semantics at the wire and parsing layer, then using our custom, built-for-MySQL execution engine to do the planning and query execution.

Changes to go-mysql-server’s architecture#

As a result of these architectural decisions, we found it necessary to extends the functionality of

go-mysql-server to accommodate Postgres’s message flow.

One important interface in this chain is the Handler, which is the abstraction that lets a library

like Dolt implement pluggable logic for handling the various wire-level messages received by the

database server. The Handler interface in go-mysql-server looks like this:

type Handler interface {

ConnectionClosed(c *Conn)

ComInitDB(c *Conn, schemaName string) error

ComQuery(c *Conn, query string, callback ResultSpoolFn) error

ComMultiQuery(c *Conn, query string, callback ResultSpoolFn) (string, error)

ComPrepare(c *Conn, query string, prepare *PrepareData) ([]*querypb.Field, error)

ComStmtExecute(c *Conn, prepare *PrepareData, callback func(*sqltypes.Result) error) error

WarningCount(c *Conn) uint16

ComResetConnection(c *Conn)

ParserOptionsForConnection(c *Conn) (sqlparser.ParserOptions, error)

}All of these handler messages beginning with Com correspond 1:1 with MySQL wire messages. For

non-prepared statements, it was simple enough to adapt these existing methods to Postgres wire

messages using the translation logic described in the previous section. But for prepared statements,

it wasn’t good enough, so we introduced a new interface to support them:

// ExtendedHandler is an extension to Handler to support additional protocols on top of MySQL.

type ExtendedHandler interface {

// ComParsedQuery is called when a connection receives a query that has already been parsed.

ComParsedQuery(c *Conn, query string, parsed sqlparser.Statement, callback ResultSpoolFn) error

// ComPrepareParsed is called when a connection receives a prepared statement query that has already been parsed.

ComPrepareParsed(c *Conn, query string, parsed sqlparser.Statement, prepare *PrepareData) (ParsedQuery, []*querypb.Field, error)

// ComBind is called when a connection receives a request to bind a prepared statement to a set of values.

ComBind(c *Conn, query string, parsedQuery ParsedQuery, prepare *PrepareData) (BoundQuery, []*querypb.Field, error)

// ComExecuteBound is called when a connection receives a request to execute a prepared statement that has already

// bound to a set of values.

ComExecuteBound(c *Conn, query string, boundQuery BoundQuery, callback ResultSpoolFn) error

}

// ParsedQuery is a marker type for communication between the ExtendedHandler interface and integrators, representing a

// query plan that can be examined or executed

type ParsedQuery any

// BoundQuery is a marker type for communication between the ExtendedHandler interface and integrators, representing a

// query plan that has been bound to a set of values

type BoundQuery anyUsing an interface extension like this allows us to isolate the changes required to support Postgres

prepared statements to a single part of the stack, which is only implemented at the Dolt and

DoltgreSQL layers, keeping the go-mysql-server package focused on its primary job of emulating

MySQL. This is an important goal because the go-mysql-server package is a standalone library that

other people are using to build their own MySQL-compatible database servers, and we don’t want to

break them or force them to implement unnecessary interface methods.

Getting this to work was mostly a matter of refactoring the Handler implementation in Dolt to

cleanly support the new interface methods. But there was one very tricky part we had to tackle,

which is emulating how Postgres infers the type of bindvars based on the columns in a query.

Consider this simple table definition and prepared statement referencing it:

CREATE TABLE test (pk BIGINT PRIMARY KEY, v1 BIGINT);

SELECT * FROM test WHERE v1 + $1 = $2;When you give this statement to Postgres to be prepared, the engine automatically infers that the

types of $1 and $2 must be BIGINT, because that’s the type of the column and expression in the

WHERE clause. MySQL’s late-binding approach to prepared statements (and differences in the type

system) make it unnecessary to determine the types of the placeholders ahead of time. But for

Postgres support, it was necessary to walk the query plan and determine placeholder type during the

call to Parse. Then, after the Dolt engine has constructed a query plan, we can extract the

relevant type information by walking it and converting the types to their equivalent Postgres OIDs.

func extractBindVarTypes(queryPlan sql.Node) ([]int32, error) {

inspectNode := queryPlan

switch queryPlan := queryPlan.(type) {

case *plan.InsertInto:

inspectNode = queryPlan.Source

}

types := make([]int32, 0)

var err error

extractBindVars := func(expr sql.Expression) bool {

if err != nil {

return false

}

switch e := expr.(type) {

case *expression.BindVar:

var oid int32

if doltgresType, ok := e.Type().(pgtypes.DoltgresType); ok {

oid = int32(doltgresType.OID())

} else {

oid, err = messages.VitessTypeToObjectID(e.Type().Type())

if err != nil {

err = fmt.Errorf("could not determine OID for placeholder %s: %w", e.Name, err)

return false

}

}

types = append(types, oid)

case *pgexprs.Cast:

if bindVar, ok := e.Child().(*expression.BindVar); ok {

var oid int32

if doltgresType, ok := bindVar.Type().(pgtypes.DoltgresType); ok {

oid = int32(doltgresType.OID())

} else {

oid, err = messages.VitessTypeToObjectID(e.Type().Type())

if err != nil {

err = fmt.Errorf("could not determine OID for placeholder %s: %w", bindVar.Name, err)

return false

}

}

types = append(types, oid)

return false

}

// $1::text and similar get converted to a Convert expression wrapping the bindvar

case *expression.Convert:

if bindVar, ok := e.Child.(*expression.BindVar); ok {

var oid int32

oid, err = messages.VitessTypeToObjectID(e.Type().Type())

if err != nil {

err = fmt.Errorf("could not determine OID for placeholder %s: %w", bindVar.Name, err)

return false

}

types = append(types, oid)

return false

}

}

return true

}

transform.InspectExpressions(inspectNode, extractBindVars)

return types, err

}This all occurs when the Parse message is received. Then just afterward, when we receive a Bind

command, we need to perform the converse transformation to translate the Postgres wire type

information into forms that our MySQL-native engine can understand:

// convertBindParameters handles the conversion from bind parameters to variable values.

func (h *ConnectionHandler) convertBindParameters(types []int32, formatCodes []int32, values []messages.BindParameterValue) (map[string]*querypb.BindVariable, error) {

bindings := make(map[string]*querypb.BindVariable, len(values))

for i := range values {

bindingName := fmt.Sprintf("v%d", i+1)

typ := convertType(types[i])

var bindVarString string

// We'll rely on a library to decode each format, which will deal with text and binary representations for us

if err := h.pgTypeMap.Scan(uint32(types[i]), int16(formatCodes[i]), values[i].Data, &bindVarString); err != nil {

return nil, err

}

bindVar := &querypb.BindVariable{

Type: typ,

Value: []byte(bindVarString),

}

bindings[bindingName] = bindVar

}

return bindings, nil

}

func convertType(oid int32) querypb.Type {

switch oid {

// TODO: this should never be 0

case 0:

return sqltypes.Int32

case messages.OidInt2:

return sqltypes.Int16

case messages.OidInt4:

return sqltypes.Int32

case messages.OidInt8:

return sqltypes.Int64

case messages.OidFloat4:

return sqltypes.Float32

case messages.OidFloat8:

return sqltypes.Float64

case messages.OidNumeric:

return sqltypes.Decimal

case messages.OidText:

return sqltypes.Text

case messages.OidBool:

return sqltypes.Bit

case messages.OidDate:

return sqltypes.Date

case messages.OidTimestamp:

return sqltypes.Timestamp

case messages.OidVarchar:

return sqltypes.Text

default:

panic(fmt.Sprintf("convertType(oid): unhandled type %d", oid))

}

}This approach won’t work in all cases because of how different the two type systems can be, but it’s good enough to get something working for our early customers.

Finally, the Doltgres server needs to manage storing and retrieving the cached query plans

associated with Parse and Bind calls. Postgres uses the term portal to refer to a fully bound

plan, so we followed suit.

boundPlan, fields, err := h.bindParams(message.SourcePreparedStatement, preparedData.Query.AST, bindVars)

if err != nil {

return err

}

h.portals[message.DestinationPortal] = PortalData{

Query: preparedData.Query,

Fields: fields,

BoundPlan: boundPlan,

}There are lots more complicated details than we have the space to share here, but this should give you a taste of the basic implementation. At the end of the day, this solution lets a normal Postgres library execute prepared statements on Doltgres and observe the same behavior as when talking to a real Postgres server. It works on all the types that we support so far, and we’re hard at work building out support for all the types that Postgres has (there are a lot).

Conclusion#

Building a new database front-end on top of an existing query engine is a fun challenge with lots of ways to screw up. We’ve found lots of them so far, and are building out our test coverage to find new ones before our customers do. We still have a long way to go before we are a true drop-in replacement for Postgres, but we’re gaining ground fast. Doltgres is pre-production quality, but is already good enough to play with and start imagining how you would use it. If you can’t wait that long and don’t mind a MySQL-compatible front-end, Dolt is already production quality and ready to deploy today. Both products are free and open source, so give one a try and tell us what you think.

Have questions about Doltgres or Dolt? Have a question about Postgres emulation? Join us on Discord to talk to our engineering team and meet other Dolt users.